I feel like one day that “no guarantee of merchantability or fitness for any particular purpose” thing will have to give.

There’s room for some nuance there. They make some reasonable predictions, like chatbot use seeming likely to enter the DSM as a contributing factor for psychosis. They’re all experienced systems programmers, and they immediately shot down Willison when he said that an llm-generated device driver would be fine because device drivers either obviously work or obviously don’t. But then they fall foul of the old Gell-Mann amnesia problem, spotting the nonsense in their own field and forgetting it the moment the topic changes.

Certainly, their past episodes have been good, and the back catalogue stretches back quite some time, but I’m not particularly interested in that sort of discussion here.

My gloomy prediction is that (b) is the way things will go, at least in part because there are fewer meaningful consequences for producing awful software, and if you started from something that was basically ok it’ll take longer for you to fail.

Startups will be slopcoded and fail quick, or be human coded but will struggle to distinguish themselves well enough to get customers and investment, especially after the ai bubble pops and we get a global recession.

The problems will eventually work themselves out of the system one way or another, because people would like things that aren’t complete garbage and will eventually discover how to make and/or buy them, but it could take years for the current damage to go away.

I don’t like being a doomer, but it is hard to be optimistic about the sector right now.

If that won’t sell it to governments around the world, I don’t know what will. Elon’s on to a winner with that strategy.

Ugh, I carried on listening to the episode in the hopes it might get better, but it didn’t deliver.

I don’t understand how people can say, with a straight face, that ai isn’t coming for your job and it is just going to make everyone more productive. Even if you ignore all the externalities of providing llm services (which is a pretty serious thing to ignore), have they not noticed the vast sweeping layoffs in the tech industry alone, let alone the damage to other sectors? They seem to be aware that the promise of the bubble is that agi will replace human labour, but seem not to think any harder about that.

Also, Willison thinks that a world without work would be awful, and that people need work to give their lives meaning and purpose and bruh. I cannot even.

Been listening to the latest oxide and friends podcast (predictions 2026), and ugh, so much incoherent ai boosting.

They’re an interesting company doing interesting things with a lot of very capable and clever engineers, but every year the ai enthusiasm ramps up, to the point where it seems like they’re not even listening to the things they’re saying and how they’re a little bit contradictory… “everyone will be a 10x vibe coder” and “everything will be made with some level of llm assistance in the near future” vs “no-one should be letting llms access anything where they could be doing permanent damage” and “there’s so much worthless slop in crates.io”. There’s enthusing over llm law firms, without any awareness of the recent robin ai collapse. Talk of llms generating their own programming language that isn’t readily human readable but is somehow more convenient for llms to extrude, but also talking about the need for more human review of vibe code. Simon Willison is there.

I feel like there’s a certain kind of very smart and capable vibe coder who really cannot imagine how people can and are using these tools to avoid having to think or do anything, and aren’t considering what an absolute disaster this is for everything and everyone.

Anyway, I can recommend skipping this episode and only bothering with the technical or more business oriented ones, which are often pretty good.

That’s horrifying. The whole thing reads like an over-elaborate joke poking fun at vibe-coders.

It’s like someone looked at the javascript ecosystem of tools and libraries and thought that it was great but far too conservative and cautious and excessively engineered. (fwiw, yegge kinda predicted the rise of javascript back in the day… he’s had some good thoughts on the software industry, but I don’t think this latest is one of them)

So now we have some kind of meta-vibe-coding where someone gets to play at being a project manager whilst inventing cutesy names and torching huge sums of money… but to what end?

Aside from just keeping Gas Town on the rails, probably the hardest problem is keeping it fed. It churns through implementation plans so quickly that you have to do a LOT of design and planning to keep the engine fed.

Apart from a “haha, turns out vibe coding isn’t vibe engineering” (because I suspect that “design” and “plan” just mean “write more prompts and hope for the best”), I have to ask again: to what end? What is being accomplished here? Where are the great works of agentic vibe coding? This whole thing just seems like it could have been avoided by giving Steve a copy of Factorio or something, with just as many valuable results.

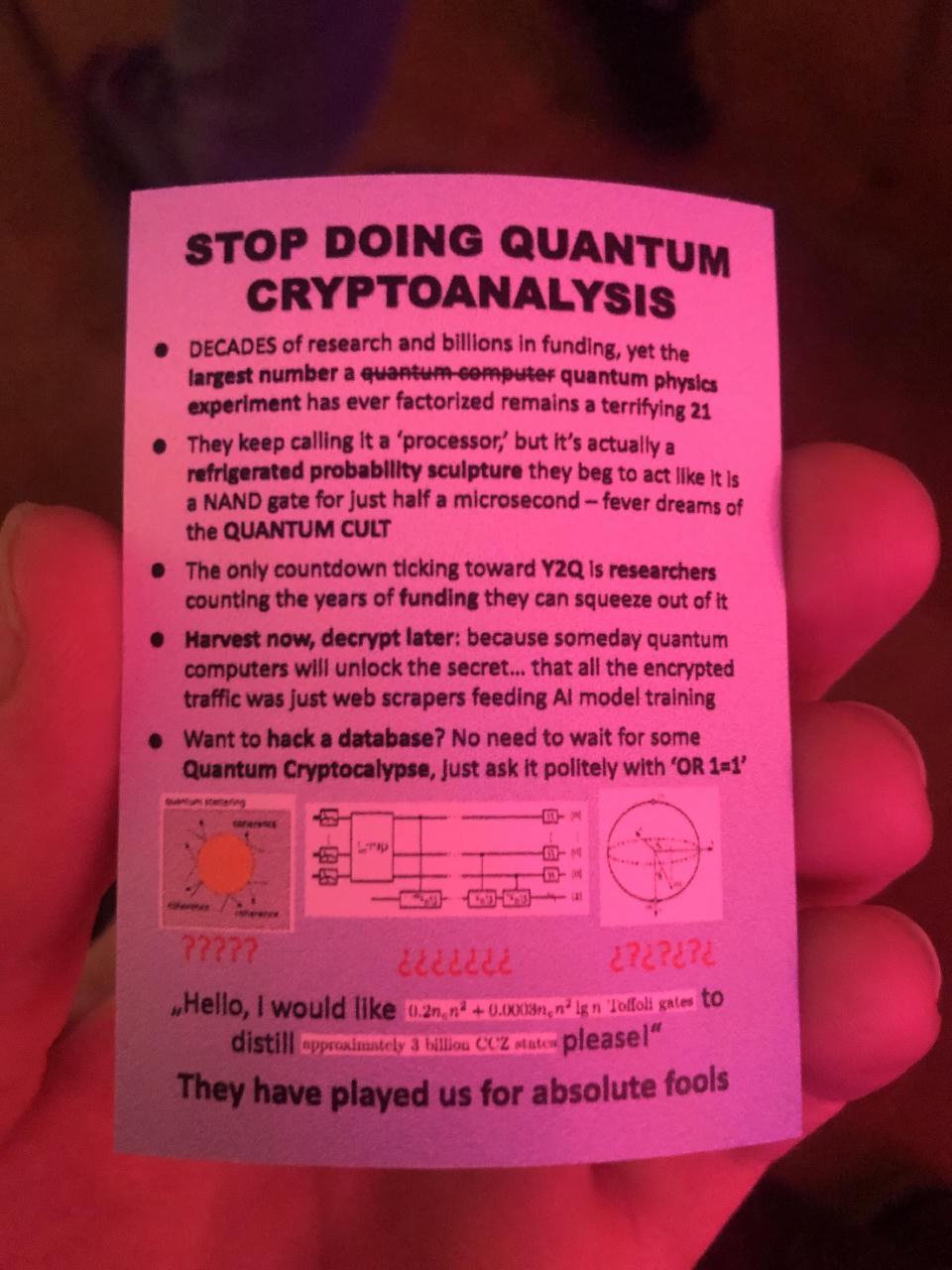

How about some quantum sneering instead of ai for a change?

They keep calling it a ‘processor,’ but it’s actually a refrigerated probability sculpture they beg to act like it Is a NAND gate for just half a microsecond

“Refrigerated probability sculpture” is outstanding.

Photo is from the recent CCC, but I can’t find where I found the image, sorry.

A photograph of a printed card bearing the text:

STOP DOING QUANTUM CRYPTOANALYSIS

(I can’t actually read the final bit, so I can’t describe it for you, apologies)

They have played us for absolute fools.

A lot of the money behind lean is from microsoft, so a push for more llm integration is depressing but unsurprising.

Turns out though that llms might actually be ok for generating some kinds of mathematical proofs so long as you’ve formally specified the problem and have a mechanical way to verify the solution (which is where lean comes in). I don’t think any other problem domain that llms have been used in is like that, so successes here can’t be applied elsewhere. I also suspect that a much, uh, leaner specialist model would do just as good a job there. As always, llms are overkill that can only be used when someone else is subsidising them.
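For anyone who hasn’t seen what “formally specified” plus “mechanically verified” looks like, here’s a toy Lean 4 example (my own illustration, nothing to do with microsoft’s llm tooling; `my_add_comm` is just a made-up name). The theorem statement is the spec, and it doesn’t matter whether a human or an llm wrote the proof, because Lean’s kernel either accepts it or rejects it:

```lean
-- The statement is the formal specification: addition of naturals is commutative.
theorem my_add_comm (a b : Nat) : a + b = b + a := by
  -- Any proof can go here; Lean's kernel checks it and rejects anything that doesn't fit.
  exact Nat.add_comm a b
```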

This is a fun read: https://nesbitt.io/2025/12/27/how-to-ruin-all-of-package-management.html

Starts out strong:

Prediction markets are supposed to be hard to manipulate because manipulation is expensive and the market corrects. This assumes you can’t cheaply manufacture the underlying reality. In package management, you can. The entire npm registry runs on trust and free API calls.

And ends well, too.

The difference is that humans might notice something feels off. A developer might pause at a package with 10,000 stars but three commits and no issues. An AI agent running npm install won’t hesitate. It’s pattern-matching, not evaluating.
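The “feels off” check the article describes is easy enough to write down, which rather underlines the point that an agent blindly running npm install isn’t doing even this much. A toy version, where `PackageStats`, `looks_off` and the threshold are all made up for illustration and are not any real registry’s API or scoring:

```python
from dataclasses import dataclass


@dataclass
class PackageStats:
    """Hypothetical snapshot of a package's public signals."""
    name: str
    stars: int
    commits: int
    issues: int


def looks_off(pkg: PackageStats, max_stars_per_commit: float = 100.0) -> bool:
    """Flag popularity that the project's visible activity doesn't back up.

    The threshold is an arbitrary illustrative value, not a tuned one.
    """
    stars_per_commit = pkg.stars / max(pkg.commits, 1)  # avoid dividing by zero
    return stars_per_commit > max_stars_per_commit and pkg.issues == 0
```

Of course, stars, commits and issues are exactly the sort of numbers the article says can be cheaply manufactured, so a check like this would only catch lazy fakes.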

I suppose it was inevitable that the insufferable idiocy that software folk inflict on other fields would eventually be turned against their own kind.

An xkcd comic.

Long-haired woman: Our field has been struggling with this problem for years!

Laptop-wielding techbro: Struggle no more! I’m here to solve it with algorithms.

6 months later:

Techbro: This is really hard.

Woman: You don’t say.

Sunday afternoon slack period entertainment: image generation prompt “engineers” getting all wound up about people stealing their prompts and styles and passing off hard work as their own. Who would do such a thing?

https://bsky.app/profile/arif.bsky.social/post/3mahhivnmnk23

@Artedeingenio

Never do this: Passing off someone else’s work as your own.

This Grok Imagine effect with the day-to-night transition was created by me — and I’m pretty sure that person knows it. To make things worse, their copy has more impressions than my original post.

Not cool 👎

Ahh, sweet schadenfreude.

I wonder if they’ve considered that it might actually be possible to get a reasonable imitation of their original prompt by using an llm to describe the generated image, and just tack on “more photorealistic, bigger boobies” to win at imagine generation.

Hah! Well found. I do recall hearing about another simulated vendor experiment (that also failed) but not actual dog-fooding. Looks like the big upgrade the wsj reported on was the secondary “Seymour Cash” 🙄 chatbot bolted on the side… the main chatbot was still claude v3.7, but maybe they’d prompted it harder and called that an upgrade.

I wonder if anthropic trialled that in house, and none of them were smart enough to break it, and that’s what led to the external trial.

So, I’m taking this one with a pinch of salt, but it is entertaining: “We Let AI Run Our Office Vending Machine. It Lost Hundreds of Dollars.”

The whole exercise was clearly totally pointless and didn’t solve anything that needed solving (like every other “ai” project, I guess), but it does give a small but interesting window into the mindset of people who have only one shitty tool and are trying to make it do everything. Your chatbot is too easily led astray? Use another chatbot to keep it in line! Honestly, I thought they were already doing this… I guess it was just too expensive or something, but now the price/desperation curves have intersected.

Anthropic had already run into many of the same problems with Claudius internally so it created v2, powered by a better model, Sonnet 4.5. It also introduced a new AI boss: Seymour Cash, a separate CEO bot programmed to keep Claudius in line. So after a week, we were ready for the sequel.

Just one more chatbot, bro. Then prompt injection will become impossible. Just one more chatbot. I swear.

Anthropic and Andon said Claudius might have unraveled because its context window filled up. As more instructions, conversations and history piled in, the model had more to retain—making it easier to lose track of goals, priorities and guardrails. Graham also said the model used in the Claudius experiment has fewer guardrails than those deployed to Anthropic’s Claude users.

Sorry, I meant just one more guardrail. And another ten thousand tokens capacity in the context window. That’ll fix it forever.

So, curse you for making me check the actual source material (it was freely available online, which seems somehow heretical. I was intending to torrent it, and I’m almost disappointed I didn’t have to) and it seems I’m wrong here… the d’Anconia copper mine in the gulch produced like a pound of copper, and they use mules for transporting stuff to and from the mine. The oil well is still suspicious, but it just gets glossed over.

I’m not prepared to read any more than that, so if there was anything else about automation in there I didn’t see it. I’d forgotten the sheer volume of baseless smug that libertarian literature exudes.

I can’t find any good sources right now, and I’m absolutely not going to find a copy of that particular screed and look up the relevant bits, but I think the oil wells in Galt’s Gulch were automated, with self-driving trucks, I think? It might also be implicit in the goods that are produced there, but that is drifting a bit into fanfic, I admit.

Anyway, between boston dynamics and the endless supply of rand fans on the internet, it’s very hard to research without reading the damn thing.

If I find a new source, I’ll report back.

They had robots in Galt’s Gulch, which means that all businesses need them. If you aren’t randmaxxing 24/7, can you really call yourself a technological visionary at the vanguard of the libertarian master race?

Of all the environments that you might want to rearrange to facilitate non-humanoid labour, surely warehouses are the easiest. There’s even a whole load of pre-existing automated warehousing stuff out there already. Wheels, castors, conveyors, scissor lifts… most humans don’t have these things, and they’re ideal for moving box-like things around.

Industrialisation and previous waves of automation have led to workplaces being rearranged to make things cheaper or faster to make, or both, but somehow the robot companies think this won’t happen again? The only thing that seems to be different this time around is that llms have shown that the world’s c-suites are packed with deeply gullible people, and we now have a load of new technology for manipulating and exploiting them.

Much of the content of The Mythical Man-Month is still depressingly relevant, especially in conjunction with Brooks’ later stuff like “No Silver Bullet”. A lot of senior tech management either never read it, or read it so long ago that they forgot the relevant points beyond the title.

It’s interesting that clausewitz doesn’t appear in lw discussions. That seems like a big point in favour of his writing.

A fun little software exercise with no real world uses at all: https://drewmayo.com/1000-words/about.html

Turns out that if you stuff the right shaped bytes into png image tEXt chunks (which don’t get compressed), the base64 encoded form of that image has sections that look like human readable text.
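A rough sketch of how the trick could work, assuming it’s done by base64-decoding the text you want to see and stashing the resulting bytes in a tEXt chunk (I haven’t checked the site’s actual code). Base64 turns every 3 input bytes into 4 output characters, so as long as the stashed bytes start on a 3-byte boundary within the file, and the message is a multiple of 4 characters drawn from the base64 alphabet (so no spaces), those characters come out verbatim. The function names, the `Comment` keyword and the 1x1 greyscale image are all my own choices:

```python
import base64
import struct
import zlib

PNG_SIGNATURE = b"\x89PNG\r\n\x1a\n"


def png_chunk(chunk_type: bytes, data: bytes) -> bytes:
    """Build one PNG chunk: big-endian length, type, data, then CRC-32 of type + data."""
    crc = zlib.crc32(chunk_type + data) & 0xFFFFFFFF
    return struct.pack(">I", len(data)) + chunk_type + data + struct.pack(">I", crc)


def png_with_readable_base64(message: str) -> bytes:
    """Return a 1x1 greyscale PNG whose base64 encoding contains `message` verbatim."""
    if len(message) % 4 != 0 or "=" in message:
        raise ValueError("message must be a multiple of 4 characters with no '=' padding")
    # Decoding the message gives raw bytes that re-encode to exactly those characters;
    # validate=True rejects anything outside the base64 alphabet (spaces included).
    hidden = base64.b64decode(message, validate=True)

    # IHDR: width 1, height 1, bit depth 8, colour type 0 (greyscale), no interlace.
    ihdr = png_chunk(b"IHDR", struct.pack(">IIBBBBB", 1, 1, 8, 0, 0, 0, 0))
    keyword = b"Comment\x00"  # tEXt data is keyword, NUL separator, then text

    # File offset where the hidden bytes would start: signature, IHDR chunk,
    # the tEXt chunk's length and type fields, then the keyword.
    offset = len(PNG_SIGNATURE) + len(ihdr) + 8 + len(keyword)
    # Pad so the hidden bytes begin on a 3-byte boundary, i.e. a base64 group boundary.
    padding = b" " * (-offset % 3)
    text = png_chunk(b"tEXt", keyword + padding + hidden)

    # One scanline: filter byte 0 followed by a single black pixel, zlib-compressed.
    idat = png_chunk(b"IDAT", zlib.compress(b"\x00\x00"))
    iend = png_chunk(b"IEND", b"")
    return PNG_SIGNATURE + ihdr + text + idat + iend
```

Something like `print(base64.b64encode(png_with_readable_base64("HiddenTextInsideAPNG")).decode())` should show the message sitting in the middle of otherwise noisy base64. Strictly speaking, the PNG spec expects tEXt contents to be Latin-1 text without nulls, so arbitrary decoded bytes may not be conforming, though anything that only cares about the pixels will probably ignore the chunk.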